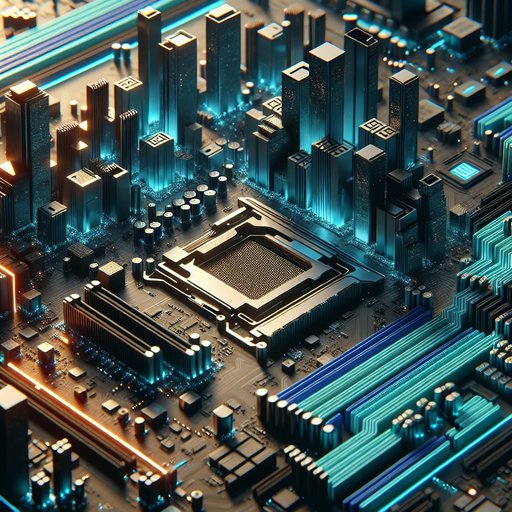

Modern motherboards have transformed from simple host platforms into dense, high-speed backplanes that quietly reconcile conflicting requirements: ever-faster I/O like PCIe 5.0, soaring transient power demands from CPUs and GPUs, and the need to integrate and interoperate with a sprawl of component standards. This evolution reflects decades of accumulated engineering discipline across signal integrity, power delivery, firmware, and mechanical design. Examining how boards reached today’s complexity explains why form factors look familiar while the underlying technology bears little resemblance to the ATX designs of the 1990s, and why incremental user-facing features mask sweeping architectural changes beneath the heatsinks and shrouds.

Elon Musk and Grok recently described a bold future: smartphones becoming “dumb boxes” that only run AI. No apps, no iOS or Android. Just a pocket-sized brain generating every pixel and sound in real time.

The claim sounds magical, but it overlooks what an operating system actually does. A large language model cannot replace an OS like iOS or Android. An OS manages hardware, memory, processes, and security, and these functions must behave deterministically. AI models, by contrast, work with probabilities. They are powerful for interpretation and creativity, but not for the precise control needed to keep systems reliable and safe.

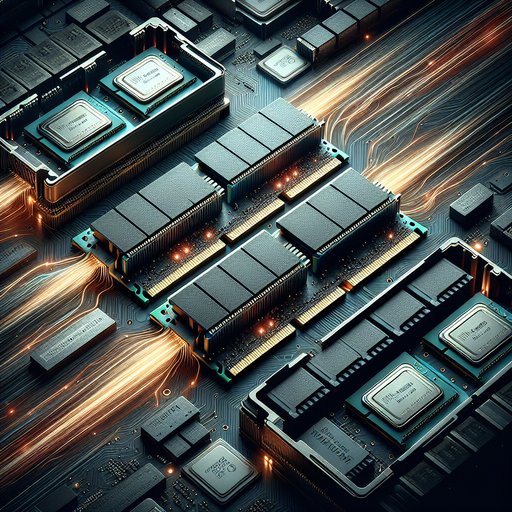

Main memory sits at a pivotal junction in every computer system, bridging fast CPUs and accelerators with far slower storage. The evolution from DDR4 to DDR5 and the exploration of storage-class memories like 3D XPoint illustrate how designers pursue more bandwidth and capacity while managing stubborn latency limits. As core counts rise, integrated GPUs proliferate, and data-heavy workloads grow, the difference between a system that keeps its execution units fed and one that stalls is often determined by how memory behaves under pressure. Understanding what changed in the DDR generations, and how emerging tiers fit between DRAM and NAND, clarifies why some applications scale cleanly while others hit ceilings long before the CPU runs out of arithmetic horsepower.
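As a rough illustration of the bandwidth side of this picture, the sketch below computes the theoretical peak bandwidth of one 64-bit memory channel from its transfer rate. The speed grades DDR4-3200 and DDR5-4800 are chosen here as examples and do not come from the text above, and the function name `peak_bandwidth_gbs` is hypothetical. Sustained bandwidth in a real system is lower, and the calculation says nothing about latency.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (10^9 bytes/s) for one memory channel.

    transfer_rate_mts: data rate in mega-transfers per second (e.g. 3200 for DDR4-3200).
    bus_width_bits:    data width of the channel; 64 bits is assumed here.
    """
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mts * 1e6 * bytes_per_transfer / 1e9


# Example speed grades (assumptions for illustration, not from the article).
# A DDR5 DIMM splits its 64 data bits into two 32-bit subchannels,
# so the total data width used here is the same as for DDR4.
ddr4 = peak_bandwidth_gbs(3200)
ddr5 = peak_bandwidth_gbs(4800)

print(f"DDR4-3200: {ddr4:.1f} GB/s per channel")
print(f"DDR5-4800: {ddr5:.1f} GB/s per channel")
print(f"Ratio:     {ddr5 / ddr4:.2f}x")
```

The ratio tracks the transfer rate alone, which is why a higher-rated module raises the bandwidth ceiling without, by itself, shortening the time to first byte.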

Linux is not one operating system but a family of distributions that shape the same kernel into different experiences. From Ubuntu’s emphasis on an approachable desktop to Arch’s bare‑bones starting point, each distro encodes a philosophy about simplicity, control, stability, and velocity. Those choices ripple outward through package managers, release models, security defaults, and hardware support, influencing how developers write software and how organizations run fleets at scale. Exploring this diversity reveals how a shared open‑source foundation can support both newcomers who want a predictable workstation and experts who want to design every detail, while continually pushing the state of the art in servers, cloud, and embedded systems.