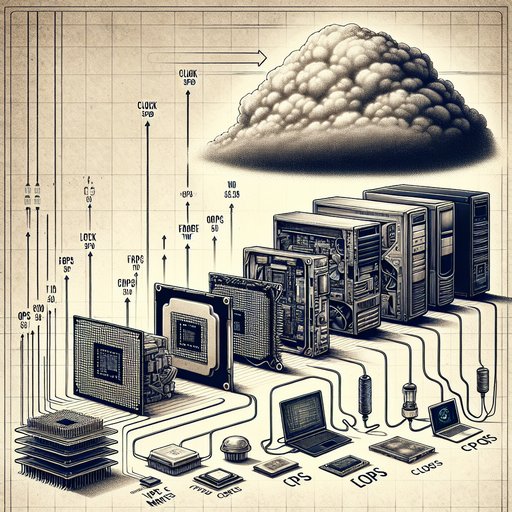

Measuring computer performance has never been a one-number affair, yet the industry has repeatedly tried to reduce it to a single headline metric. Early eras prized MIPS (millions of instructions per second) and clock speed; high-performance computing then crowned FLOPS (floating-point operations per second); today users compare gaming frame times, web responsiveness, and battery life. Each shift mirrors a deeper technological change: from single-core CPUs to heterogeneous systems, from local disks to cloud services, and from batch throughput to interactive latency. Understanding how and why benchmarks evolved reveals not only what computers do well, but also why traditional metrics often fail to predict real-world experience.