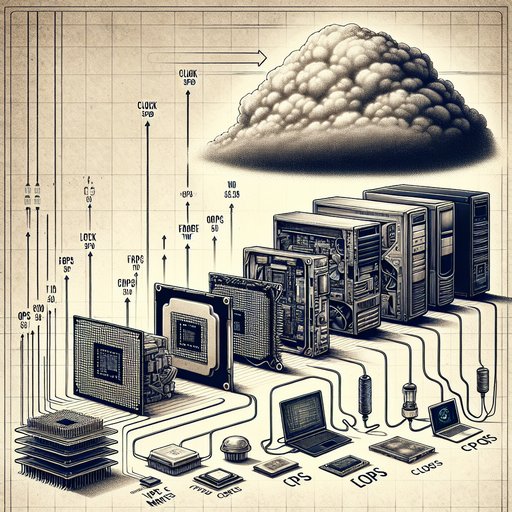

Measuring computer performance has never been a one-number affair, yet the industry has repeatedly tried to reduce it to a headline metric. Early eras prized MIPS and clock speed, then HPC crowned FLOPS, and now users compare gaming frame times, web responsiveness, and battery life. Each shift mirrors a deeper technological change: from single-core CPUs to heterogeneous systems, from local disks to cloud services, and from batch throughput to interactive latency. Understanding how and why benchmarks evolved reveals not only what computers do well, but also why traditional metrics often fail to predict real-world experience.

Performance metrics matter because computers have become both more diverse and more specialized over time. A desktop rendering frames, a phone loading a web page, and a data center serving millions of queries emphasize different bottlenecks. As architectures added more cores, wider vectors, larger caches, and accelerators, a single scalar number stopped mapping cleanly to user-perceived speed. The evolution of benchmarks tracks these shifts and explains how measurement can guide, or mislead, design and purchasing decisions.

MIPS, or millions of instructions per second, captured early attention because it was simple and seemingly comparable. In practice, an instruction on one ISA can do more or less work than an instruction on another, so cross-architecture MIPS comparisons mislead. Compiler quality, instruction mix, and microarchitectural features such as out-of-order execution and caches all shift MIPS in ways that barely relate to task completion time. The industry learned that IPC and clock rate, which together determine MIPS, do not by themselves determine how quickly a workload finishes, because run time also depends on how many instructions the program must execute.
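The gap between MIPS and run time follows from the classic "iron law" of processor performance: execution time equals instruction count times cycles per instruction (CPI) divided by clock frequency. The sketch below is a minimal illustration, assuming two hypothetical ISAs running the same task at the same clock; every number is invented for the example, not measured.

```python
from dataclasses import dataclass


@dataclass
class Run:
    name: str
    instructions: float  # dynamic instruction count for the whole task (hypothetical)
    cpi: float           # average cycles per instruction (hypothetical)
    clock_hz: float      # clock frequency in Hz (hypothetical)

    def seconds(self) -> float:
        # Iron law: T = instruction count * CPI / frequency
        return self.instructions * self.cpi / self.clock_hz

    def mips(self) -> float:
        # MIPS = instructions / (time * 1e6), i.e. frequency / (CPI * 1e6)
        return self.instructions / (self.seconds() * 1e6)


# "dense" needs fewer, more capable instructions; "simple" needs more
# instructions but retires them at a lower CPI.
dense = Run("dense-ISA", instructions=1.0e9, cpi=2.0, clock_hz=2.0e9)
simple = Run("simple-ISA", instructions=2.5e9, cpi=1.0, clock_hz=2.0e9)

for r in (dense, simple):
    print(f"{r.name}: {r.mips():.0f} MIPS, {r.seconds():.2f} s")
```

Here the hypothetical simple ISA reports twice the MIPS (2000 vs. 1000) yet takes 1.25 s instead of 1.00 s, which is exactly the mismatch the paragraph describes.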

FLOPS rose to prominence in high-performance computing because many scientific codes are dominated by floating-point math. LINPACK, which measures how quickly a machine solves a dense system of linear equations, became the basis for the TOP500 list in its High-Performance LINPACK (HPL) form, rewarding machines that sustain extreme floating-point throughput. That emphasis catalyzed vector units and GPU acceleration, but it also exposed a gap: many HPC workloads are limited by memory bandwidth and latency, not raw FLOPS. Benchmarks like HPCG (High Performance Conjugate Gradients) emerged to represent these memory-bound patterns, reminding practitioners that peak rates matter only when a program can feed the math units.
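One common way to reason about "feeding the math units" is the roofline model: a kernel's attainable FLOP rate is the smaller of the machine's peak compute and its memory bandwidth multiplied by the kernel's arithmetic intensity (FLOPs per byte moved). The sketch below assumes a hypothetical machine and rough, illustrative intensities; the constants are placeholders, not specifications of any real system.

```python
PEAK_GFLOPS = 1000.0  # hypothetical peak floating-point throughput (GFLOP/s)
MEM_BW_GBS = 100.0    # hypothetical sustained memory bandwidth (GB/s)


def attainable_gflops(intensity_flops_per_byte: float) -> float:
    """Roofline bound: min(peak compute, intensity * memory bandwidth)."""
    return min(PEAK_GFLOPS, intensity_flops_per_byte * MEM_BW_GBS)


# Illustrative intensities:
# - daxpy (y = a*x + y on doubles): 2 FLOPs per 24 bytes (load x, load y, store y)
# - sparse matrix-vector multiply: roughly 0.1 to 0.2 FLOP/byte, as in HPCG-like codes
# - cache-blocked dense matrix multiply: high reuse, as in LINPACK-like codes
kernels = {
    "daxpy (streaming)": 2 / 24,
    "sparse mat-vec (rough)": 0.15,
    "blocked DGEMM (rough)": 30.0,
}

for name, ai in kernels.items():
    bound = attainable_gflops(ai)
    print(f"{name:24s} AI={ai:6.3f} FLOP/B -> {bound:7.1f} GFLOP/s "
          f"({100 * bound / PEAK_GFLOPS:5.1f}% of peak)")
```

On this hypothetical machine, any kernel below 10 FLOP/byte is bandwidth bound, so the dense kernel reaches peak while the streaming and sparse kernels reach only a few percent of it.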

Application-focused suites tried to balance comparability and realism. SPEC CPU fixed its workloads and constrained compiler flags so that integer and floating-point performance could be compared across generations, while SPECjbb and SPECjEnterprise stressed Java servers and middleware. Database benchmarks such as TPC-C (transaction-processing throughput) and TPC-H (decision-support queries) influenced storage layouts, caching strategies, and concurrency control. These suites improved rigor and reproducibility, yet vendors still tuned aggressively for them, sometimes producing spectacular scores that did not translate into better performance on a customer’s actual mix of queries and data.
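Suites like SPEC CPU summarize many workloads as a geometric mean of per-benchmark ratios against a reference machine. The sketch below shows that arithmetic with hypothetical benchmark names and run times; it is not SPEC's tooling and the numbers are not real results.

```python
import math

# Hypothetical run times in seconds (illustrative only).
reference_s = {"compiler": 400.0, "compression": 300.0, "physics": 500.0}
system_s = {"compiler": 200.0, "compression": 100.0, "physics": 500.0}


def geomean(values):
    # Average in log space, then exponentiate back.
    logs = [math.log(v) for v in values]
    return math.exp(sum(logs) / len(logs))


# Per-benchmark ratio: how many times faster than the reference machine.
ratios = {name: reference_s[name] / system_s[name] for name in reference_s}
print(ratios)
print(f"geometric mean ratio: {geomean(ratios.values()):.3f}")
```

The geometric mean keeps relative comparisons between two systems independent of which reference machine is chosen, but it also lets a large win on one workload (3x here) offset no gain at all on another, which is one way a headline score can drift from a specific customer's workload.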

On client systems, synthetic microbenchmarks gave way to scenario-driven tests that mirror daily use. Web responsiveness is measured by harnesses like Speedometer, which exercise DOM updates, timers, and JavaScript engines through simulated user actions in apps built with different frameworks. Content creation and productivity are represented by tools like Cinebench for rendering and PCMark for encoding and office workflows, while cross-platform tests such as Geekbench aim for portability at the cost of strict workload control. Storage and memory also matter more visibly: random I/O latency, queue-depth behavior, and cache misses can dominate experiences like launching apps or compiling code.
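Queue-depth behavior is easier to interpret through Little's Law: in steady state, the number of outstanding requests equals throughput times average latency, so IOPS equals queue depth divided by latency. The latency figures below are hypothetical stand-ins for measurements taken at different queue depths, not data from any particular drive.

```python
# Hypothetical average latencies (microseconds) observed at each queue depth.
measured_latency_us = {1: 80.0, 4: 90.0, 32: 200.0, 128: 800.0}


def iops(queue_depth: int, avg_latency_us: float) -> float:
    # Little's Law: L = X * W  =>  X (IOPS) = L (queue depth) / W (latency)
    return queue_depth / (avg_latency_us * 1e-6)


for qd, lat in measured_latency_us.items():
    print(f"QD={qd:4d} latency={lat:6.1f} us -> {iops(qd, lat):10.0f} IOPS")
```

In this illustration throughput saturates near 160,000 IOPS while latency keeps climbing, and an app launch issuing mostly dependent reads at queue depth 1 would see only about 12,500 IOPS, so the headline high-queue-depth number says little about that experience.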

Gaming benchmarks show why averages can hide the story. Frames per second give a quick gauge, but frame-time distributions, 1% lows, and 0.1% lows reveal the stutter and pacing problems that players actually notice. Modern engines juggle asset streaming, shader compilation, physics, and AI across CPU threads and GPU queues, so the bottleneck shifts from scene to scene. API choice and driver maturity matter, and technologies like DirectStorage, which can move asset decompression onto the GPU, change whether performance is bound by the GPU, the CPU, or storage.
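The difference between an average and the lows can be computed directly from a frame-time trace. Tools define "1% low" differently; this sketch assumes one common convention, the average FPS over the slowest 1% of frames (others report the 99th-percentile frame time converted to FPS). Both traces are hypothetical.

```python
import math


def avg_fps(frame_times_ms):
    # Average FPS = frames rendered / total elapsed seconds.
    return len(frame_times_ms) / (sum(frame_times_ms) / 1000.0)


def low_fps(frame_times_ms, fraction=0.01):
    # Assumed convention: average FPS over the slowest `fraction` of frames.
    n = max(1, math.ceil(len(frame_times_ms) * fraction))
    slowest = sorted(frame_times_ms, reverse=True)[:n]
    return avg_fps(slowest)


# Hypothetical 1000-frame traces, in milliseconds per frame.
spiky = [8.0] * 990 + [50.0] * 10   # fast on average, with periodic stutter
steady = [12.0] * 1000              # slower, but evenly paced

for name, trace in (("spiky", spiky), ("steady", steady)):
    print(f"{name:6s} avg={avg_fps(trace):6.1f} FPS  "
          f"1% low={low_fps(trace):6.1f} FPS  "
          f"0.1% low={low_fps(trace, 0.001):6.1f} FPS")
```

The spiky trace averages about 119 FPS but its 1% low is 20 FPS, while the steady trace holds about 83 FPS throughout.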

A system that posts a high average FPS but suffers erratic frame times will feel worse than a lower-FPS machine with consistent pacing, and that lesson generalizes: traditional metrics often fail because real workloads stress the whole system, not just a single unit of compute. Throughput and latency pull in different directions, and tail latency (the p95 and p99 delays) can define user satisfaction or breach service-level objectives. Mobile and data center platforms also operate under power and thermal budgets, making performance per watt, sustained speed, and throttling behavior as important as peak numbers.
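Tail latency is usually reported as percentiles of per-request latency. The sketch below uses the nearest-rank percentile definition on a synthetic, seeded sample in which 2% of requests take a hypothetical slow path; the distribution is invented for illustration.

```python
import math
import random


def percentile(samples, p):
    # Nearest-rank percentile: smallest value with at least p% of samples <= it.
    ordered = sorted(samples)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


# Synthetic latencies: 98% fast requests, 2% slow ones (e.g. a cache miss
# or a pause). Seeded so the run is repeatable.
rng = random.Random(42)
latencies_ms = ([rng.uniform(5, 15) for _ in range(9800)]
                + [rng.uniform(100, 300) for _ in range(200)])

mean = sum(latencies_ms) / len(latencies_ms)
print(f"mean={mean:.1f} ms  p50={percentile(latencies_ms, 50):.1f} ms  "
      f"p95={percentile(latencies_ms, 95):.1f} ms  "
      f"p99={percentile(latencies_ms, 99):.1f} ms")
```

The mean and p95 stay in the low tens of milliseconds, while p99 lands above 100 ms, so a service-level objective written on p99 would catch a problem the average hides.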

The memory wall, Amdahl’s Law, operating system scheduling, and background tasks further blur the line between a chip’s theoretical capability and the time it takes to finish a job.

A better approach combines rigor with relevance: representative end-to-end scenarios, paired with targeted microbenchmarks, expose where the bottlenecks are and how improvements translate into user outcomes. For AI, MLPerf organizes results into training and inference benchmarks spanning different models and data types, while in gaming, publishing frame-time traces along with the exact settings and capture method builds trust.
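Amdahl’s Law quantifies one of those limits: if a fraction p of the original run time is sped up by a factor n, the overall speedup is 1 / ((1 - p) + p / n), which can never exceed 1 / (1 - p). The sketch below assumes a hypothetical workload that is 90% parallelizable.

```python
def amdahl_speedup(parallel_fraction: float, n: float) -> float:
    # Amdahl's Law: S = 1 / ((1 - p) + p / n)
    # p = fraction of original run time that benefits from the improvement
    # n = speedup applied to that fraction (e.g. number of cores)
    return 1.0 / ((1.0 - parallel_fraction) + parallel_fraction / n)


for cores in (2, 8, 64, 1024):
    print(f"p=0.90, {cores:5d} cores -> {amdahl_speedup(0.90, cores):5.2f}x")

# As n grows without bound, the speedup approaches 1 / (1 - p).
print(f"limit for p=0.90: {1 / (1 - 0.90):.1f}x")
```

Even with 1024 cores the hypothetical workload gains less than 10x, because the serial 10% dominates once the parallel part has shrunk.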

For general computing, measures like the time until an application becomes interactive, the time to complete a build, or battery-normalized throughput often align with what people actually value. No single score can summarize all of this, but a transparent suite of metrics can clarify trade-offs and guide both design and purchasing with fewer surprises.