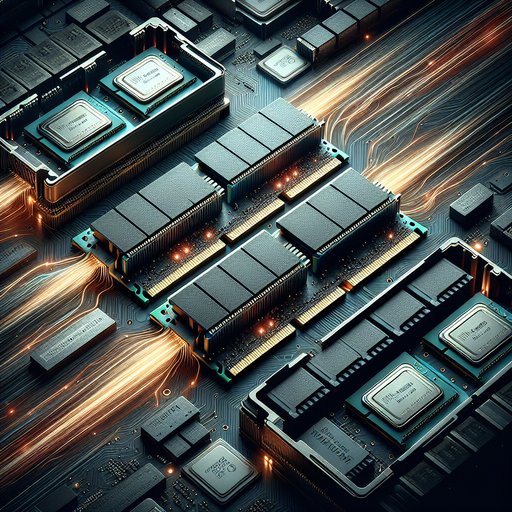

Main memory sits at a pivotal junction in every computer system, bridging fast CPUs and accelerators with far slower storage. The evolution from DDR4 to DDR5 and the exploration of storage-class memories like 3D XPoint illustrate how designers pursue more bandwidth and capacity while managing stubborn latency limits. As core counts rise, integrated GPUs proliferate, and data-heavy workloads grow, the difference between a system that keeps its execution units fed and one that stalls is often determined by how memory behaves under pressure. Understanding what changed in the DDR generations, and how emerging tiers fit between DRAM and NAND, clarifies why some applications scale cleanly while others hit ceilings long before the CPU runs out of arithmetic horsepower.

Memory matters because performance is frequently bounded not by compute throughput but by how quickly data can be delivered to compute units. The industry’s steady climb in CPU parallelism and vector width increased the demand for concurrent, high-bandwidth memory transactions. Meanwhile, the physical realities of DRAM cells kept fundamental access latency roughly flat in nanoseconds across generations, creating a tension that architects resolve with more channels, deeper queues, and smarter scheduling. The transition from DDR4 to DDR5 is best read as a response to this imbalance rather than a simple speed bump.

DDR4 established the baseline for modern platforms with data rates commonly ranging from 2133 to 3200 mega-transfers per second (MT/s) and a 64-bit data channel per DIMM. Bank groups, introduced with DDR4, improved concurrency by letting accesses to different groups overlap with fewer conflicts. At the same time, typical absolute access latencies for random reads remained on the order of tens of nanoseconds, so software saw performance gains mainly when parallelism or locality improved. As servers moved from four to six to eight memory channels per socket, aggregate bandwidth scaled to keep pace with multi-core processors, even when single-access latency barely budged.
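The channel scaling described above follows from simple arithmetic: theoretical peak bandwidth is the data rate times the bus width times the channel count. The following is a minimal sketch of that calculation; the function name `peak_bandwidth_gbs` is an illustrative choice, and the result is an upper bound that real systems do not sustain once refresh, bank conflicts, and bus turnarounds are accounted for.

```python
def peak_bandwidth_gbs(data_rate_mts: float, channels: int = 1,
                       bus_width_bits: int = 64) -> float:
    """Theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes).

    data_rate_mts  -- transfers per second in millions (e.g. 3200 for DDR4-3200)
    channels       -- number of independent memory channels
    bus_width_bits -- data width of one channel, excluding any ECC bits
    """
    bytes_per_transfer = bus_width_bits / 8
    return data_rate_mts * 1e6 * bytes_per_transfer * channels / 1e9


if __name__ == "__main__":
    # Channel counts taken from the text: four, six, and eight per socket.
    for channels in (4, 6, 8):
        gbs = peak_bandwidth_gbs(3200, channels)
        print(f"DDR4-3200 x {channels} channels: {gbs:.1f} GB/s peak")
```

A single DDR4-3200 channel works out to 25.6 GB/s, so moving from four to eight channels doubles the ceiling without touching per-access latency.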

DDR5 raises headline bandwidth by design, with JEDEC-standard data rates starting at 4800 MT/s and extending beyond 6400 MT/s as platforms mature. Each DIMM is split into two independent 32-bit subchannels, which improves command efficiency and reduces wasted bus time on small transfers. The burst length doubled to 16 and the number of bank groups increased, expanding the pool of open pages and giving the controller more opportunities to schedule around conflicts. A power-management IC (PMIC) on the module moves voltage regulation closer to the DRAM, and on-die ECC corrects cell-level errors inside each chip, which helps yield and reliability but does not replace end-to-end ECC where it is required.
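One way to see why the narrower subchannel and the longer burst go together is to compute how many bytes a single burst delivers. The sketch below assumes a 64-byte CPU cache line, which is typical of current x86 and many Arm processors but is an assumption rather than something the DDR standards mandate; the helper name `bytes_per_burst` is illustrative.

```python
CACHE_LINE_BYTES = 64  # assumption: typical cache-line size, not set by the DRAM standard


def bytes_per_burst(width_bits: int, burst_length: int) -> int:
    """Bytes delivered by one burst on a channel of the given data width."""
    return width_bits // 8 * burst_length


ddr4_burst = bytes_per_burst(width_bits=64, burst_length=8)   # one 64-bit DDR4 channel
ddr5_burst = bytes_per_burst(width_bits=32, burst_length=16)  # one 32-bit DDR5 subchannel

if __name__ == "__main__":
    print(f"DDR4 channel burst:    {ddr4_burst} bytes")
    print(f"DDR5 subchannel burst: {ddr5_burst} bytes")
    print("Both match one cache line:",
          ddr4_burst == ddr5_burst == CACHE_LINE_BYTES)
```

Because each subchannel still delivers a full cache line per burst, a DDR5 DIMM can service two independent cache-line requests at once where a DDR4 DIMM services one.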

Despite the bandwidth leap, DDR5 does not magically lower true latency; in fact, early modules often have higher cycle counts for timings, and absolute nanosecond latency can be similar to or slightly worse than fast DDR4. A simple comparison illustrates the point: DDR4-3200 with CL16 yields roughly 10 ns of CAS latency, while DDR5-6000 with CL36 sits around 12 ns, before considering tRCD and tRP. Modern memory controllers mitigate this by extracting memory-level parallelism, keeping many requests in flight and exploiting the expanded bank structure to hide individual access delays. Workloads that can issue concurrent streams, such as integrated graphics or large data scans, benefit greatly from DDR5’s bandwidth even if the first-byte latency is unchanged.
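The CAS comparison above can be reproduced directly: the memory clock runs at half the transfer rate, so latency in nanoseconds is the cycle count divided by the clock frequency. This is a minimal sketch with an illustrative function name, and it covers only CL, not tRCD or tRP.

```python
def cas_latency_ns(data_rate_mts: float, cl_cycles: int) -> float:
    """Convert a CAS latency in clock cycles to nanoseconds.

    DDR memory transfers data twice per clock, so the clock frequency
    in MHz is half the data rate in MT/s.
    """
    clock_mhz = data_rate_mts / 2
    return cl_cycles / clock_mhz * 1000  # cycles / MHz gives microseconds


if __name__ == "__main__":
    # The two configurations quoted in the text.
    for name, rate, cl in (("DDR4-3200 CL16", 3200, 16),
                           ("DDR5-6000 CL36", 6000, 36)):
        print(f"{name}: {cas_latency_ns(rate, cl):.1f} ns")
```

The cycle count more than doubles between the two modules, yet the nanosecond figure moves by only about 2 ns, which is why comparing CL numbers across generations is misleading.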

Server platforms magnify these effects by pairing DDR5 with more memory channels and higher-capacity DIMMs, increasing both bandwidth and footprint for in-memory datasets. Newer CPUs expose eight or more DDR5 channels per socket, and stacked-die techniques raise per-DIMM capacities, letting databases, analytics frameworks, and virtualization hosts keep more hot data close to compute. Improved power delivery on the DIMM helps maintain signal integrity at higher speeds, which is critical in densely populated server boards. The end result is higher sustained throughput for memory-intensive, parallel workloads, while single-threaded, random-access code sees more modest gains unless software improves locality and prefetchability.

3D XPoint, commercialized by Intel as Optane, showed a complementary path by inserting a persistent, byte-addressable tier between DRAM and NAND. In DIMM form, its read latencies were several times those of DRAM but far below NAND, and in NVMe form it delivered accesses on the order of ten microseconds, dramatically faster than typical SSDs. Data center deployments used it to expand memory economically and to accelerate metadata, indices, and write-heavy logs, taking advantage of its higher write endurance compared with NAND. Although Micron exited 3D XPoint in 2021 and Intel announced the wind-down of its Optane business in 2022, its real-world use validated memory tiering: placing large, slightly slower media close to the CPU reduces pressure on DRAM, cuts I/O amplification, and improves tail latencies for data-intensive services.
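The case for tiering can be sketched with a weighted average: if most accesses hit DRAM, a slower tier behind it adds little to the mean access time. The model below is a deliberate simplification that ignores queuing and bandwidth limits, and the latencies in the usage example (100 ns for DRAM, three times that for the slower tier) are placeholder values chosen for illustration, not measurements of any product.

```python
def average_latency_ns(dram_hit_fraction: float, dram_ns: float,
                       slow_tier_ns: float) -> float:
    """Mean access latency for a two-tier memory under a simple hit/miss model."""
    if not 0.0 <= dram_hit_fraction <= 1.0:
        raise ValueError("dram_hit_fraction must be between 0 and 1")
    miss_fraction = 1.0 - dram_hit_fraction
    return dram_hit_fraction * dram_ns + miss_fraction * slow_tier_ns


if __name__ == "__main__":
    DRAM_NS = 100.0           # placeholder value, not a measurement
    SLOW_NS = 3.0 * DRAM_NS   # "several times DRAM", assumed to be 3x here
    for hit in (0.5, 0.9, 0.99):
        avg = average_latency_ns(hit, DRAM_NS, SLOW_NS)
        print(f"{hit:.0%} of accesses served from DRAM: {avg:.0f} ns average")
```

Under these assumed numbers, a workload with good locality pays only a modest average penalty for the extra capacity, while one that misses DRAM half the time pays for it on every other access.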

The practical impact of bandwidth and latency varies with workload characteristics. Integrated GPUs tend to scale strongly with memory bandwidth because they share system memory instead of having dedicated VRAM, making DDR5’s higher throughput especially valuable for gaming on iGPUs and for GPU-like compute on APUs. AI inference with large embeddings, in-memory analytics, and graph traversal benefit when many outstanding requests can be overlapped, whereas branchy, pointer-chasing code that defeats prefetchers remains latency-bound. Developers see the difference in profiling: memory stall cycles drop when concurrency and streaming patterns are present, while serialized pointer walks remain limited by the latency of each dependent access.
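The gap between overlapped and pointer-chasing access patterns can be estimated with Little's law: sustained throughput equals the number of requests in flight times the bytes per request, divided by latency. The sketch below assumes 64-byte cache lines and a 100 ns loaded latency; both are illustrative assumptions, as is the function name.

```python
def sustained_bandwidth_gbs(outstanding_requests: int, latency_ns: float,
                            line_bytes: int = 64) -> float:
    """Little's law estimate of achievable bandwidth in GB/s.

    Bytes per nanosecond is numerically equal to GB/s (1 GB = 1e9 bytes).
    """
    return outstanding_requests * line_bytes / latency_ns


if __name__ == "__main__":
    LATENCY_NS = 100.0  # assumed loaded latency, for illustration only
    # A dependent pointer chase keeps one request in flight at a time;
    # streaming or batched code can keep many in flight.
    for in_flight in (1, 8, 64):
        gbs = sustained_bandwidth_gbs(in_flight, LATENCY_NS)
        print(f"{in_flight:>2} requests in flight: {gbs:.2f} GB/s")
```

With a single dependent request in flight, the estimate stays well under 1 GB/s no matter how fast the DIMMs are, which is why latency-bound code sees little benefit from DDR5's extra bandwidth.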

Looking forward, the hierarchy keeps widening: HBM attached to accelerators delivers hundreds of gigabytes per second per stack for massively parallel kernels, while DDR5 anchors general-purpose compute with broad capacity and cost efficiency. Coherent interconnects like CXL add new ways to attach expanded memory at somewhat higher latencies, enabling pooling and tiering without replatforming applications. These trends shift performance engineering toward topology-aware allocation, NUMA-conscious data placement, and algorithms that maximize contiguous, parallel access. The winners are systems that treat memory not as a monolith but as a set of tiers, each exploited for what it does best.

The throughline from DDR4 to DDR5 and through storage-class memory is clear: bandwidth and parallelism are scaling aggressively, while intrinsic DRAM latency moves slowly. Hardware answers with more channels, banks, subchannels, and smarter controllers; software answers with data layouts and access patterns that convert that structure into useful work. By matching access patterns to what each tier does best, keeping hot, latency-sensitive data in DRAM, feeding parallel streams from DDR5’s higher aggregate bandwidth, and using persistent memory as a large cache close to the CPU, architects can turn potential bottlenecks into throughput. The evolution is less about chasing a single metric and more about balancing the entire pipeline so compute, memory, and storage pull in the same direction.