The new creative handshake happens in the half‑light of screens: a human sketches intent in a sentence, and a model replies with images, copy, code, or melody. What began as a research curiosity has slipped into daily workflows, remapping how ideas move from brief to product and how businesses price speed itself. Generative AI is not just a tool; it behaves like a studio assistant with infinite stamina, an analyst who reads everything, a sales rep who never sleeps. Its rise is neither sudden nor simple. It echoes decades of experiments in machine creativity, now amplified by transformers and cheap cloud muscle, and it forces a negotiation—between imagination and automation, originality and scale, authorship and efficiency—that will define the next economy.

In a small design studio that smells faintly of marker ink and burnt coffee, a creative director types a prompt into a text‑to‑image model for the third time. The first render lands too slick, the second too earnest. On the third try, the model returns a tableau that strikes the right awkward sweetness, and the room exhales. The junior designer doesn’t open stock sites; the account manager doesn’t call in a rush illustrator.

Instead, they iterate live with a machine that offers endless variations without complaint. It feels less like outsourcing and more like a new kind of conversation, one where intent is drafted in language and the answer returns in color. By morning, those comps have become a pitch deck, stitched with AI‑drafted copy and a sizzle reel cut from synthetic b‑roll. A few blocks away, a CFO watches cycle time metrics dip again: briefs resolved in hours, A/B tests spun up in minutes, prototypes shipped before lunch.

The speed doesn’t just change outputs; it changes behavior. Teams ask bolder questions because the penalty for a bad idea shrinks. Marketing and product begin to share a dashboard. The conversation turns from whether to use generative tools to where to place them in the assembly line of thought.

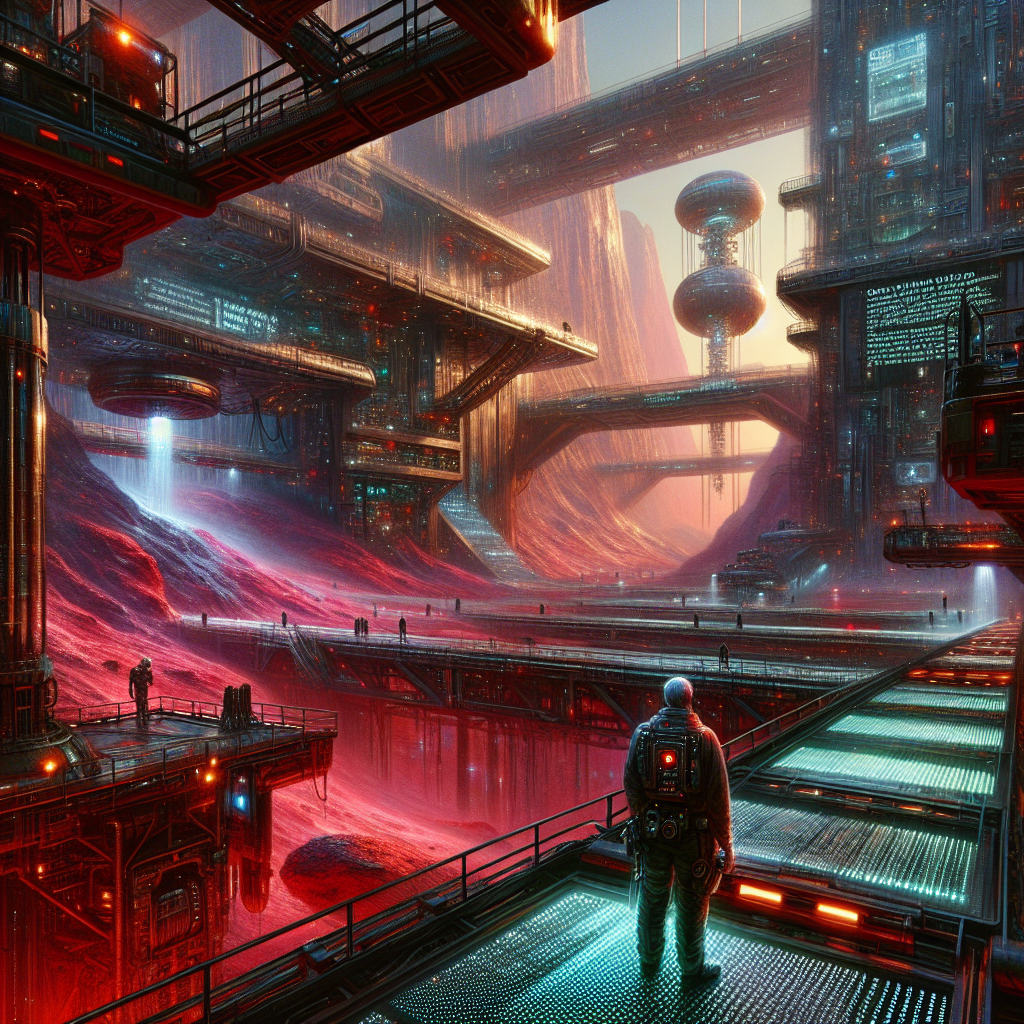

This moment is oddly familiar if you remember the early machine muses. In 1957, Lejaren Hiller and Leonard Isaacson fed rules to the ILLIAC I and pressed print on the Illiac Suite, a score generated by a mainframe that critics alternately derided and celebrated. By the 1970s, Harold Cohen’s AARON drew autonomous line art that filled galleries with plots that hummed like steady rain. Engineers toyed with Markov chains and rule‑based grammars; artists taped pen plotters to desks.

Businesses largely shrugged. These systems were novelties: brilliant, brittle, and destined to be told in footnotes rather than balance sheets.

The oxygen arrived in the 2010s, as deep learning leapt from papers to platforms. AlexNet’s shock victory on ImageNet in 2012 reset ambitions. In 2014, generative adversarial networks introduced a creative antagonism, one network creating and another critiquing, that could dream in images. Style transfer made content wear the texture of Van Gogh. Then came 2017 and the transformer, built on a simple idea (pay attention to everything at once) that became an engine for context. Suddenly, language models didn’t just autocomplete; they could sustain a thought.

The lab tricks began to look like workflows. By 2019, models like GPT‑2 were writing paragraphs that felt less like stunts and more like drafts. GPT‑3 widened the aperture in 2020, and within a year programmers were pair programming with a bot that could name variables better than they could. Copilots whispered tests and refactors; marketing teams used generated copy to seed a hundred variations.

It wasn’t perfect. Hallucinations drifted in like lint. But companies learned to contain them, wrapping models with retrieval systems, guardrails, and human review. A new habit took hold: ask the machine first, then edit with intention.

Images and audio followed. DALL·E and its cousins turned text into pictures persuasive enough to win pitches and start legal arguments. Open‑source diffusion models let a thousand styles bloom, while enterprises looked for cleaner provenance. Adobe launched models trained on licensed libraries; stock agencies inked deals to make licensing legible.

The workbench expanded: storyboards, product shots, mood reels, voiceovers. A fresh role emerged, the prompt director: part poet, part producer, coaxing the model into the company’s voice. Behind the scenes, lawyers circled phrases like "training data" and "derivative," and content provenance standards crept into production pipelines.

Inside firms, the early experiments became infrastructure. Customer service swarms with assistants that summarize calls, propose replies, and route complex cases up the chain. Research teams run literature reviews that would once have taken weeks; product managers interrogate feature requests with models that remember context across entire backlogs. The invisible plumbing matters: retrieval‑augmented generation to keep answers grounded, connectors to CRM and ERP systems to keep models honest, dashboards to track drift and cost. As GPUs become the new scarce commodity, facilities managers factor heat and water into their AI budgets. The model may live in the cloud, but it leaves fingerprints on the utility bills and compliance reports.

The most interesting shifts now sit at the seam between creativity and operations. A retailer’s photo studio links a product database to a generative pipeline, rendering seasonal imagery in minutes and swapping styles on demand. A video game studio spins NPC dialogue from brand bibles and lore, keeping writers in the loop as directors rather than stenographers. In a factory, design software translates prompts into manufacturable parts and hands the designs to robots that test, measure, and report back. Small models, fine‑tuned on proprietary tone and process, run at the edge in stores and warehouses, making decisions where latency once killed ambition. Agents coordinate across APIs, booking campaigns and negotiating ad slots while humans set the taste and the guardrails.

Under the rush, a quieter negotiation is happening in the craft. Painters who once guarded their brushes now curate datasets and build mood boards that a model can metabolize. Copywriters become editors and ethicists, deciding which voices belong in the brand chorus and which are off‑limits. Engineers weigh speed against reproducibility, writing prompts as carefully as code. The work doesn’t vanish; it migrates. There are days when outputs converge toward the same bland median, and days when a synthetic suggestion unlocks a human risk that a board might finally accept.

The business math adjusts in parallel. Investors whisper about "model arbitrage," the spread between expensive frontier capabilities and the cheaper distilled versions that fit a team’s needs. Procurement begins to ask not just about benchmarks but about indemnity, data governance, and content credentials. Regulators test watermarking and transparency rules, while unions and guilds draft clauses about synthetic labor. The competitive advantage lives less in access to a particular model and more in the choreography around it: the data moat, the cultural taste, the discipline to say no when the machine is confidently wrong.

What happens to originality when every desk has a collaborator that has seen everything? One answer is that originality becomes a question of constraints and curation. The teams that flourish treat models as mirrors of dominant patterns and then deliberately step away from the reflection. They log their prompts as they once logged experiments. They teach the machine their house style and reserve the right to break it. Some mornings the office is quiet enough to hear the HVAC hum against the rack heat, and a model sits idle, waiting for someone to ask a better question.

That, more than the size of a context window or the latest diffusion trick, is the hinge: how we frame problems, what provenance we demand, where we slow down. Generative AI has become the new default studio intern and analyst, cheap and eager. Whether it becomes a partner worthy of creative credit or stays a clever appliance will depend on choices that look mundane in the moment—naming a dataset, signing a contract, writing a prompt—and add up to the culture we build around the machine.