Sleep is not downtime; it is active biology that orchestrates learning, metabolic balance, immune resilience, and healthy aging. Yet modern life—late-night screens, irregular schedules, round-the-clock work, and chronic stress—pushes sleep aside, with real health costs. Research consistently links inadequate or poor-quality sleep with impaired attention, mood disturbances, higher accident risk, and increased susceptibility to illness. Understanding how sleep works and adopting practical, evidence-informed strategies can help most people sleep better; when problems persist, however, individualized guidance from a healthcare professional is essential.

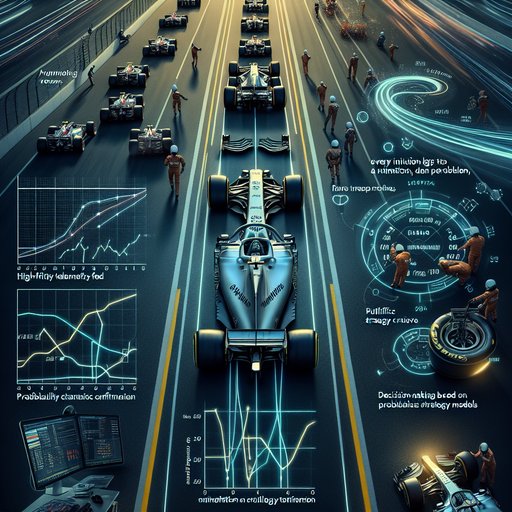

Formula 1’s transformation from intuition-led pit walls to analytics-driven decision centers is one of modern sport’s defining shifts. Live telemetry, high-fidelity tire monitoring, and probabilistic strategy models have turned every lap into a rolling optimization problem, where milliseconds and megabytes carry equal weight. The result is not just better-informed choices, but a new rhythm to races themselves—stint lengths flex to real-time degradation curves, pit windows open and close with traffic forecasts, and rain calls hinge on model confidence rather than gut feel. This evolution did not happen overnight; it grew from early data loggers into a tightly integrated ecosystem of sensors, software, and specialists that now shapes almost every move on track.
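To make "stint lengths flex to real-time degradation curves" concrete, here is a deliberately toy sketch, not a description of how any team actually models strategy. It assumes a single pit stop, linear tyre degradation, a fixed pit-lane time loss, and no traffic, fuel burn, or safety cars; `TyreModel`, `stint_time`, `best_pit_lap`, and every number are hypothetical. It brute-forces the pit lap that minimizes total race time, then shows the optimum moving when the first stint's degradation estimate is revised.

```python
from dataclasses import dataclass


@dataclass
class TyreModel:
    """Hypothetical linear tyre model: lap time grows by a fixed amount per lap of tyre age."""
    base_lap_s: float      # lap time on a fresh tyre, in seconds (assumed)
    deg_s_per_lap: float   # time lost per lap of tyre age, in seconds (assumed)


def stint_time(model: TyreModel, laps: int) -> float:
    """Total stint time: sum of base + deg * age for age = 0 .. laps - 1."""
    return laps * model.base_lap_s + model.deg_s_per_lap * laps * (laps - 1) / 2


def best_pit_lap(race_laps: int, first: TyreModel, second: TyreModel,
                 pit_loss_s: float) -> tuple[int, float]:
    """Brute-force the number of laps on the first set that minimises one-stop race time."""
    best_lap, best_total = 1, float("inf")
    for pit_lap in range(1, race_laps):
        total = (stint_time(first, pit_lap)
                 + stint_time(second, race_laps - pit_lap)
                 + pit_loss_s)
        if total < best_total:
            best_lap, best_total = pit_lap, total
    return best_lap, best_total


if __name__ == "__main__":
    second_set = TyreModel(base_lap_s=90.0, deg_s_per_lap=0.1)
    # Re-fitting the first set's degradation mid-race moves the optimal stop.
    for observed_deg in (0.1, 0.2):
        first_set = TyreModel(base_lap_s=90.0, deg_s_per_lap=observed_deg)
        lap, total = best_pit_lap(50, first_set, second_set, pit_loss_s=22.0)
        print(f"deg={observed_deg}: pit after lap {lap}, total {total:.1f} s")
```

With equal degradation on both sets the optimum sits at mid-race (after lap 25 of 50); doubling the first set's degradation pulls the stop forward to lap 17. Brute force is adequate here because a one-stop race has only a few dozen candidate laps.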

Linux is not one operating system but a family of distributions that shape the same kernel into different experiences. From Ubuntu’s emphasis on an approachable desktop to Arch’s bare‑bones starting point, each distro encodes a philosophy about simplicity, control, stability, and velocity. Those choices ripple outward through package managers, release models, security defaults, and hardware support, influencing how developers write software and how organizations run fleets at scale. Exploring this diversity reveals how a shared open‑source foundation can support both newcomers who want a predictable workstation and experts who want to design every detail, while continually pushing the state of the art in servers, cloud, and embedded systems.

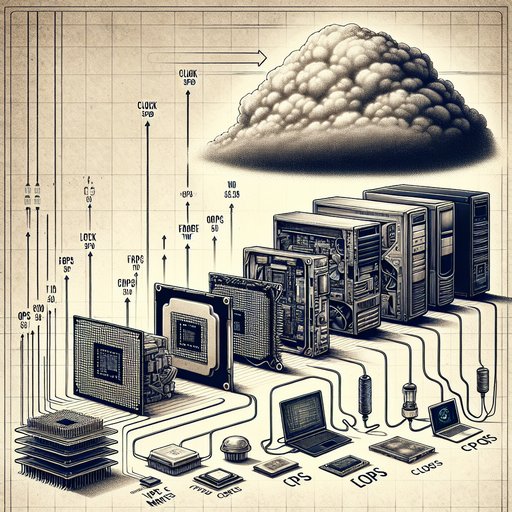

Measuring computer performance has never been a one-number affair, yet the industry has repeatedly tried to reduce it to a headline metric. Early eras prized MIPS and clock speed, then HPC crowned FLOPS, and now users compare gaming frame times, web responsiveness, and battery life. Each shift mirrors a deeper technological change: from single-core CPUs to heterogeneous systems, from local disks to cloud services, and from batch throughput to interactive latency. Understanding how and why benchmarks evolved reveals not only what computers do well, but also why traditional metrics often fail to predict real-world experience.
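As a small illustration of why a headline number can fail to predict experience, the sketch below compares average FPS with a tail metric for two hypothetical frame-time traces (invented data, not measurements). The 99th-percentile frame time uses the nearest-rank convention, one of several in common use; the function name `summarize` is illustrative.

```python
import math
import statistics


def summarize(frame_times_ms: list[float]) -> tuple[float, float]:
    """Return (average FPS, 99th-percentile frame time in ms) for a frame-time trace."""
    # Average FPS is derived from the mean frame time, so brief spikes are diluted.
    avg_fps = 1000.0 / statistics.mean(frame_times_ms)
    # Nearest-rank percentile: the smallest sample with at least 99% of samples at or below it.
    ordered = sorted(frame_times_ms)
    rank = math.ceil(99 * len(ordered) / 100)
    p99_ms = ordered[rank - 1]
    return avg_fps, p99_ms


if __name__ == "__main__":
    smooth = [16.0] * 100                   # steady frames (hypothetical)
    stuttery = [14.0] * 98 + [60.0, 60.0]   # faster on average, with two long hitches (hypothetical)
    for name, trace in (("smooth", smooth), ("stuttery", stuttery)):
        fps, p99 = summarize(trace)
        print(f"{name}: {fps:.1f} avg FPS, p99 frame time {p99:.1f} ms")
```

The stuttery trace wins on average FPS yet has a 99th-percentile frame time almost four times worse, the kind of hitch a player notices and a single average hides.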