- Written by: Valenenzia Gruelle

In a week crowded with splashier tech headlines, a quieter publication deserves center stage: a qualitative study investigating pharmacovigilance systems in Dubai hospitals [4]. It is tempting to file this under the bureaucratic shelf of compliance and reporting. But that would miss the moment. Pharmacovigilance is where living bodies meet engineered remedies, where human testimony meets structured data. It is the seam binding care, software, and society—and in that seam, we can glimpse how human-technology integration will actually arrive: not as a sudden singularity, but as mundane coordination done well. Read this study as a milestone, not merely for Dubai, but for a global conversation about safety, equity, and the dignity of patients and clinicians who navigate increasingly intelligent systems [4].

- Written by: Valenenzia Gruelle

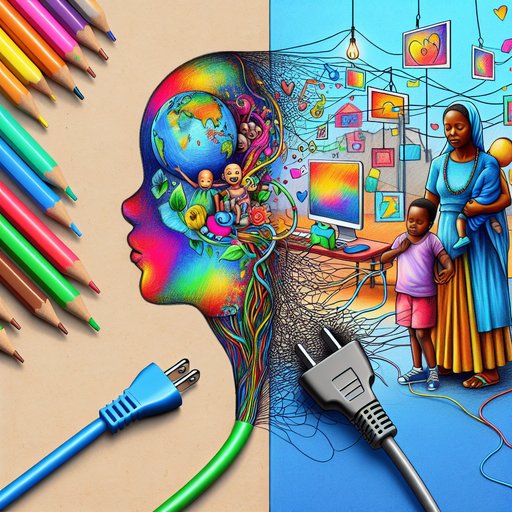

The week began with a simple, unassailable truth returning to headlines: teachers are the key to students’ AI literacy—and they need support to do the job well [1]. In a season of breathless product launches and policy whiplash, that reminder is less a slogan than a societal checkpoint. If we want classrooms to be where democratic competence with intelligent tools is cultivated rather than corroded, we must equip the educators who steward them. Everything else is vapor.

- Written by: Valenenzia Gruelle

Melaka’s Graphytoon promises a small miracle: take a child’s crayon sketch and let artificial intelligence set it in motion, turning young art into animated stories [5]. The spectacle is delightful—and disarming. When software starts co‑authoring childhood, the core question isn’t merely what the algorithm can do, but who it serves, who it listens to, and who is left voiceless as daily life becomes a negotiation with code. In a world where whole populations still struggle for basic connectivity, the risks of exclusion are not abstract; African women, for example, have less access to the internet than men [1]. Graphytoon is a charming case with serious stakes: it asks whether we will design the next generation of creative tools as engines of inclusion or as silent editors of children’s imaginations.

- Written by: Valenenzia Gruelle

Amid the cheerleading for “Designing Interactive Virtual Training: Best Practices And Tech Stack Essentials,” we should ask an unfashionable question: who decides what counts as “best,” and who absorbs the consequences when algorithms become our trainers-in-chief? The eLearningindustry.com primer is useful precisely because it surfaces the growing expectation that learning will be orchestrated by software stacks, data pipelines, and AI-driven interactivity [5]. But the harder problem is not choosing tools; it is allocating responsibility for the values those tools encode. When training flows quietly from dashboards and recommendation engines, control migrates from classrooms to code. That shift can widen the gap between the voices that are well represented in data and those pushed off the edge of the graph. The result is a civic challenge disguised as an IT project: if we let “best practices” set the defaults of working life, we must also build the scaffolding that lets everyone, especially the least digitally loud, reshape them.