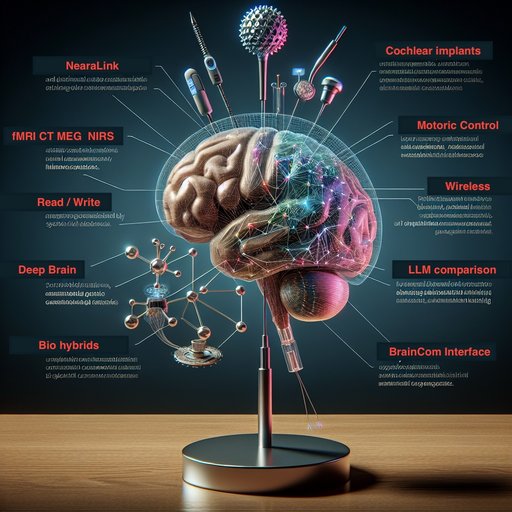

In our search, and perhaps even our dream, to extend the human brain, we must ultimately learn how both to read from it and to write to it. We already have technologies that connect our minds to machines in tangible ways. Cochlear implants restore hearing by delivering sound information directly to the auditory nerve. Brain-controlled prosthetic hands and legs are moving from research labs toward clinical use, and speech-assistive devices now allow some people who have lost their voice to communicate through thought-driven interfaces. Cochlear implants are proven at large scale, while most of the other applications are still in clinical trials, and nearly all of them deal with sensory or motor functions. More abstract abilities, such as enhancing memory, improving logical reasoning, or adding entirely new layers of cognitive function, remain just beyond our reach, but every year we get closer.

There are already several paths toward this goal. Wired approaches use electrodes: both large arrays capable of reading or stimulating activity over broad areas of brain tissue, and ultra-small probes like those promised by Neuralink, designed to interface with individual neurons. There are also wireless, non-invasive technologies, ranging from MRI to emerging optical and electromagnetic techniques, that might one day allow us not only to monitor the brain but to “write” information into it directly. And then there are biological hybrids: nutrient-rich scaffolds where living brain cells grow on or within a substrate that is itself connected, wired or wirelessly, to an external system. The crucial question is how sustainable the more invasive or hybrid approaches will be. Could they be implanted early in life and remain functional for decades? Or should we already be focusing our ambitions on non-invasive, wireless connections, perhaps something as simple in appearance as wearing a “thinking cap”?

The concept of reading from the brain is far more mature than the idea of writing to it. We have nearly a century of experience with electroencephalography (EEG), which measures the brain’s electrical activity from the scalp. Modern brain–computer interfaces (BCIs) build on this foundation, using improved sensors and sophisticated algorithms to decode patterns of neural activity into commands for machines. For example, individuals with paralysis can control a cursor on a screen or a robotic arm through intention alone. The challenge is resolution: because the skull and scalp blur electrical signals, EEG is limited in how precisely it can localize their sources within the brain. This is where invasive electrode arrays offer a leap in capability: they record signals directly from the brain’s surface, or even from deep inside neural tissue, with much higher fidelity.
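To make “decoding patterns into commands” a little more concrete, here is a minimal sketch, assuming each time window has already been reduced to a small feature vector (for example, band power from two electrodes) and that the user has recorded a few labeled calibration trials per command. It uses a nearest-centroid classifier, one of the simplest possible decoders; practical BCIs typically rely on more sophisticated methods. The helper names, command labels, and feature values are all hypothetical.

```python
from math import dist  # Euclidean distance (Python 3.8+)


def fit_centroids(trials):
    """Average the calibration feature vectors recorded for each command."""
    centroids = {}
    for command, vectors in trials.items():
        n = len(vectors)
        # zip(*vectors) groups the values of each feature across trials.
        centroids[command] = [sum(values) / n for values in zip(*vectors)]
    return centroids


def decode(features, centroids):
    """Return the command whose centroid is closest to the new feature vector."""
    return min(centroids, key=lambda command: dist(features, centroids[command]))


# Hypothetical calibration data: two band-power features per trial,
# recorded while the user imagines each action.
calibration = {
    "cursor_left":  [[0.9, 0.3], [1.1, 0.2], [1.0, 0.4]],
    "cursor_right": [[0.2, 1.0], [0.3, 1.2], [0.4, 0.9]],
    "rest":         [[0.5, 0.5], [0.6, 0.6], [0.5, 0.4]],
}

centroids = fit_centroids(calibration)
print(decode([1.0, 0.35], centroids))  # -> cursor_left
```

Calibration is per user: the same command can produce quite different feature patterns in different people, which is why the decoder is fitted from that person’s own trials rather than fixed in advance.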

On the other side of the equation is writing to the brain: stimulating it to produce a desired perception, movement, or cognitive change. Cochlear implants are a prime example of this in practice, converting sound into patterns of electrical impulses that stimulate the auditory nerve and that the brain learns to interpret as meaningful sound. Deep brain stimulation (DBS) implants, another well-established technology, deliver targeted electrical pulses to treat conditions like Parkinson’s disease, reducing tremors and improving motor control. These successes give us a blueprint for influencing brain activity deliberately. Yet extending these methods to abstract cognitive functions, such as enhancing memory capacity, accelerating learning, or introducing entirely new senses, requires far greater understanding and control than we currently possess.

Neuralink and similar ventures propose one pathway toward this future: densely packed arrays of microscopic electrodes inserted directly into the brain. Theoretically, these could read from and write to individual neurons, allowing an unprecedented level of precision. This approach could make it possible to store information directly in memory, alter emotional states at will, or link minds together in a network. However, it is also the most invasive method, raising questions about safety, longevity, and biocompatibility. Neural tissue reacts to foreign objects, and over years or decades, the brain may encapsulate or degrade implanted electrodes. Even if the technology works flawlessly at first, the physical reality of a lifetime implant is fraught with unknowns—will it need periodic replacement? Will scar tissue interfere with function? Will the immune system reject it in subtle ways over time?

For these reasons, many researchers are exploring less invasive but still high-resolution methods. Advances in imaging, such as functional MRI (fMRI), magnetoencephalography (MEG), and near-infrared spectroscopy (NIRS), are pushing the boundaries of what we can detect from outside the skull. These methods are non-invasive, and in principle they could evolve into “reading hats” capable of decoding thoughts and intentions without surgery. Writing to the brain without opening it is more challenging, but transcranial magnetic stimulation (TMS) is already approved in several countries for treating depression, and low-intensity focused ultrasound is being studied experimentally as a way to alter neural activity. With improvements in targeting and resolution, these methods could one day deliver highly specific cognitive changes without physically penetrating the brain.

Between the fully invasive and the entirely non-invasive lies the hybrid approach, where biology meets engineering. Imagine a nutrient-rich polymer scaffold seeded with neurons, integrated into brain tissue at the site of injury or augmentation. This living interface would grow with the brain, reducing the risk of rejection and potentially lasting for decades. Embedded electronics within the scaffold could translate between biological and digital signals, forming a stable, high-bandwidth connection. Such systems could also repair damaged neural networks, guiding regeneration after injury or neurodegenerative disease. The concept is still in its infancy, but early work with organoids and neural cultures connected to electronic devices suggests that biohybrid BCIs might become a practical reality.

Longevity is one of the most pressing concerns for any brain interface. Implants must survive the body’s constant cycles of growth, repair, and immune defense. Wires and electrodes can corrode, shift, or break; wireless systems may lose calibration as the brain subtly changes over years. The ideal solution might be a device implanted at a young age that naturally integrates with brain development, becoming as much a part of the nervous system as the eyes or ears. This would require materials and designs that are both biocompatible and adaptable, able to maintain function over decades without degradation. Achieving this is one of the major engineering frontiers in the field.
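One simple way to cope with the slow loss of calibration described above is to keep adapting the decoder while the person uses it. The sketch below assumes a decoder that stores a reference feature vector (a “centroid”) for each command and nudges it toward newly confirmed samples with an exponential moving average. The function name, the `alpha` rate, and the drift values are hypothetical, and a real system would also need safeguards against adapting to mislabeled or noisy data.

```python
def update_centroid(centroid, sample, alpha=0.05):
    """Exponential moving average: move the stored centroid a fraction alpha toward a new sample."""
    return [(1 - alpha) * c + alpha * s for c, s in zip(centroid, sample)]


# Hypothetical drift: confirmed samples for one command now arrive near 1.4
# on the first feature instead of the originally calibrated 1.0.
centroid = [1.0, 0.3]
for _ in range(100):
    centroid = update_centroid(centroid, [1.4, 0.3])

print([round(v, 3) for v in centroid])  # -> [1.398, 0.3]
```

A small `alpha` tracks slow drift while ignoring momentary noise; a larger one adapts faster but is more easily thrown off by a handful of bad samples.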

Yet, while the technical challenges are immense, the social and ethical questions are equally profound. Who controls the data streaming in and out of your brain? How do you prevent malicious interference? Could brain-to-brain communication make privacy obsolete, or will it require entirely new legal frameworks? Enhancing cognition might deepen inequality if only the wealthy can afford it, or it might democratize intelligence if made universally available. History suggests that such transformative technologies will be both empowering and disruptive in unpredictable ways.

Another challenge lies in understanding the brain’s complexity. The human brain contains roughly 86 billion neurons, each connected to thousands of others. The mind is not simply a processor of inputs and outputs; it is a dynamic, adaptive system shaped by experience, emotion, and context. Writing to the brain effectively means speaking a language we barely understand. Even with perfect hardware, we may need decades of neuroscience breakthroughs before we can reliably encode memories, alter reasoning patterns, or add new sensory modalities without unintended consequences.
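As a rough order-of-magnitude check on that complexity, assuming “thousands” means somewhere between $10^3$ and $10^4$ connections per neuron:

$$
N_{\text{connections}} \approx N_{\text{neurons}} \times k \approx \left(8.6 \times 10^{10}\right) \times \left(10^{3} \text{ to } 10^{4}\right) \approx 8.6 \times 10^{13} \text{ to } 8.6 \times 10^{14}
$$

That puts the count on the order of $10^{14}$ synaptic connections, consistent with the commonly cited estimate of roughly 100 trillion synapses.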

Still, progress is accelerating. Brain–computer interface trials are multiplying. Algorithms for decoding neural activity grow more sophisticated every year, aided by advances in machine learning. Imaging technology is getting faster and sharper. Invasive devices are becoming smaller and more precise, while non-invasive systems are closing the performance gap. The dream of a seamless, high-bandwidth connection between mind and machine is no longer confined to science fiction; it is emerging, piece by piece, from laboratories and into the clinic.

If we think of the brain as a federation of roughly 350 specialist “LLMs” spread across three functional divisions (the two cortical hemispheres plus a deep, third network of subcortical and limbic circuits), the engineering implications become clearer. Reading the brain is not about extracting a single stream; it is about sampling the right specialist at the right moment and letting a “router” (in this analogy, the thalamus-prefrontal loop) arbitrate which module’s output matters for the task. This mirrors how some modern AI systems are built: in mixture-of-experts architectures, a router selects and weights the outputs of many expert sub-networks, and multimodal systems fuse signals from specialized components into a coherent response.
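The “experts plus router” pattern from AI can be sketched in a few lines. This is a minimal mixture-of-experts sketch with top-k routing, assuming toy experts that each transform the same input and a router that is simply handed one relevance score (logit) per expert; in real models the router is itself a learned network, and nothing here models actual thalamic or prefrontal computation. The expert functions and numbers are hypothetical.

```python
import math


def softmax(logits):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def route(experts, router_logits, x, top_k=2):
    """Run only the top-k experts and blend their outputs by renormalized gate weights."""
    gates = softmax(router_logits)
    # Indices of the k experts the router weights most heavily for this input.
    chosen = sorted(range(len(experts)), key=lambda i: gates[i], reverse=True)[:top_k]
    norm = sum(gates[i] for i in chosen)
    output = [0.0] * len(x)
    for i in chosen:
        weight = gates[i] / norm
        y = experts[i](x)
        output = [o + weight * yi for o, yi in zip(output, y)]
    return output, chosen


# Hypothetical "specialists": each transforms the same input differently.
experts = [
    lambda x: [2 * v for v in x],  # expert 0
    lambda x: [v + 1 for v in x],  # expert 1
    lambda x: [0.0 for _ in x],    # expert 2
]

out, chosen = route(experts, router_logits=[2.0, 1.0, -3.0], x=[1.0, 2.0])
print(chosen)  # -> [0, 1]
```

Running only the top-k experts is what keeps such systems efficient. In the brain analogy, it corresponds to the router attending to a few specialists at a time rather than consulting all of them for every task.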

Writing to the brain follows the same logic. A cochlear implant already “addresses” the auditory specialist; DBS targets motor or limbic specialists; next-generation surface arrays and micro-electrodes will reach finer “agents” in motor, speech, or memory maps. Non-invasive focused ultrasound and other wearable caps could nudge deeper agents without surgery, while biohybrid scaffolds might one day grow new specialists that integrate with existing ones. Sustainability over a lifetime then becomes an orchestration problem as much as a hardware problem: it requires stable interfaces that can hot-swap specialists, recalibrate the router, and safely update the “weights” of memories and skills, always under strict governance, because upgrading one specialist without the others can distort the whole ensemble. In short, durable brain–machine symbiosis will look less like a single chip in a single place and more like a long-lived operating system coordinating hundreds of neural experts (our biological multi-LLM) through a mix of wired, wireless, and living interfaces.

The beauty of this similarity between the human brain, with its roughly 350 specialized neural networks, and multi-model AI systems built from LLMs is that it opens up more ways to study the human brain and even to simulate it, whether for testing medicines, modeling “brain events” such as diseases or epilepsy, or simply understanding how our brain works.

In the end, whether the future of brain–machine interaction is wired, wireless, or biohybrid, its defining characteristic may be adaptability. A system implanted at birth may be continuously upgraded, just as our smartphones receive software updates today. A non-invasive “thinking hat” might be replaced every few years as imaging and stimulation technologies improve. A biohybrid implant might evolve along with its host, changing as the mind itself changes. What matters most is that the connection is sustainable—not just physically, but functionally and ethically—over the course of a human lifetime.

If history is any guide, the first wave of high-function brain augmentation will be imperfect, expensive, and limited to specific applications, much like the earliest cochlear implants or artificial hearts. But with time, refinement, and societal adaptation, it could become as routine as corrective lenses or joint replacements. Whether through a hat, a chip, or a living scaffold, the ability to extend the mind may become one of humanity’s defining achievements. The question is not whether we can connect to the brain, but how—and whether we will do so in a way that truly serves the mind, rather than merely exploiting it.