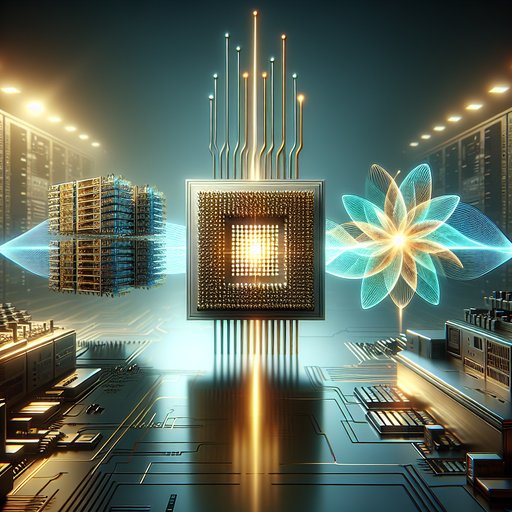

The contest among Intel’s x86 CPUs, ARM-based processors, and AMD’s RDNA GPUs is not a simple horse race; it is a clash of design philosophies that now meet at the same bottleneck: energy. Each camp optimizes different trade-offs: x86 for single-thread performance and broad compatibility with legacy software, ARM for scalable efficiency and system integration, and RDNA for massively parallel graphics and emerging AI features within strict power budgets. As form factors converge and workloads diversify, from cloud-native microservices and AI inference to high-refresh gaming and thin-and-light laptops, these approaches increasingly intersect in shared systems. Understanding how they differ, and where they overlap, explains why raw performance is no longer judged in isolation and why performance per watt has become the defining metric of modern computing.

The stakes are high because compute demand is growing faster than power budgets and cooling solutions can accommodate. Laptops now chase all-day battery life without sacrificing responsiveness, game consoles target steady frame times under living-room thermals, and data centers face mounting electricity and sustainability constraints. At the same time, AI inference and real-time graphics have made parallelism a first-class requirement on consumer devices. This convergence forces CPU and GPU architects to meet in the middle with hybrid designs, smarter memory hierarchies, and software that exposes low-level control to developers.

Intel’s x86 lineage remains anchored in backward compatibility, translating complex instructions into micro-ops for wide out-of-order cores and high single-thread performance. Recent hybrid generations such as Alder Lake and Raptor Lake pair performance cores with efficiency cores and use hardware-guided scheduling (Thread Director) to balance responsiveness and power. On servers, Intel augments vector pipelines with AVX-512 and adds Advanced Matrix Extensions (AMX) in 4th Gen Xeon to accelerate AI and HPC workloads. Meteor Lake extends the approach to client SoCs with tiled designs and an integrated NPU, pushing x86 beyond a monolithic CPU toward a heterogeneous compute platform.

ARM’s strategy emphasizes modular IP and licensing, enabling silicon vendors to blend CPU clusters, NPUs, GPUs, and custom accelerators into tightly integrated SoCs. The big.LITTLE concept matured into DynamIQ, letting designers mix performance and efficiency cores for sustained throughput within phone, laptop, and server envelopes. ARMv9 with SVE2 broadens vectorization options while preserving the power advantages that made ARM dominant in mobile. Cloud providers increasingly deploy Arm Neoverse-based silicon—such as AWS Graviton—because predictable performance per watt translates into lower total cost of ownership at scale, while Apple’s M‑series shows how deep vertical optimization can bring ARM efficiency to general-purpose computing.

AMD’s RDNA family refactors GPU compute around power efficiency and latency, shifting from GCN’s wave64 default toward wave32 execution and organizing compute into workgroup processors for better scheduling. RDNA 2 introduced hardware ray tracing and Infinity Cache to reduce external memory traffic, an approach tuned for fixed console power and bandwidth budgets. RDNA 3 extends efficiency with chiplet-based designs—separating the graphics compute die from memory cache dies—to scale performance without ballooning cost or power. The architecture now includes AI-oriented instructions and improves ray tracing throughput, while maintaining a shader-first philosophy that fits gaming and real-time graphics.
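The wave32 versus wave64 trade-off can be illustrated with a toy occupancy calculation. This is only a sketch: `wave_stats` is a hypothetical helper, the thread count of 96 is an arbitrary example, and real hardware scheduling involves far more than lane counting.

```python
import math


def wave_stats(active_threads: int, wave_size: int) -> tuple[int, int]:
    """Return (waves launched, idle lanes) for a group of threads.

    Toy model: every wave occupies `wave_size` lanes, so lanes left over
    in the last wave sit idle.
    """
    waves = math.ceil(active_threads / wave_size)
    idle_lanes = waves * wave_size - active_threads
    return waves, idle_lanes


for size in (32, 64):
    waves, idle = wave_stats(96, size)
    print(f"wave{size}: {waves} waves, {idle} idle lanes")
# wave32: 3 waves, 0 idle lanes
# wave64: 2 waves, 32 idle lanes
```

In this example the narrower wave wastes no lanes, while the wider one leaves a quarter of its lanes unused. With thread counts that are multiples of 64, both sizes come out even.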

Memory systems and interconnects increasingly determine real-world performance, and the three camps respond with different but converging tactics. RDNA’s large Infinity Cache reduces GDDR bandwidth demands; consoles use unified memory pools so the CPU and GPU share the same physical memory and avoid redundant copies. ARM-centric SoCs commonly employ unified memory to minimize data motion across CPU, GPU, and NPU blocks, which is a cornerstone of Apple’s M‑series responsiveness. On x86 laptops and desktops, integrated GPUs and fast interconnects narrow the gap with discrete devices, while modern APIs like DirectX 12, Vulkan, and Metal give developers explicit control over resource lifetimes and synchronization to exploit these layouts.
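Both effects described above, a cache absorbing memory traffic and unified memory removing copies, can be approximated with first-order arithmetic. The sketch below is a simplification with made-up inputs: the function names, the 50% hit rate, and the 16 GiB/s link speed are illustrative assumptions, not measurements of any product.

```python
def dram_traffic_gbps(requested_gbps: float, cache_hit_rate: float) -> float:
    """Bandwidth that still reaches external memory after on-chip cache hits."""
    if not 0.0 <= cache_hit_rate <= 1.0:
        raise ValueError("cache_hit_rate must be between 0 and 1")
    return requested_gbps * (1.0 - cache_hit_rate)


def copy_time_ms(size_mib: float, link_gib_per_s: float) -> float:
    """Time to move a buffer across a link; a unified pool skips this step."""
    if link_gib_per_s <= 0:
        raise ValueError("link_gib_per_s must be positive")
    return size_mib / 1024.0 / link_gib_per_s * 1000.0


# Hypothetical numbers, chosen only to show the shape of the calculation.
print(dram_traffic_gbps(1000.0, 0.5))  # 500.0
print(copy_time_ms(256.0, 16.0))       # 15.625
```

Under these assumed inputs, a single 256 MiB copy costs about as much time as one frame at 60 Hz, which is why avoiding the copy entirely matters.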

AI acceleration underscores the philosophical split and the growing overlap. Intel equips servers with AMX tiles for dense matrix math and ships client NPUs to shift background AI tasks away from CPU and GPU. ARM SoCs frequently integrate NPUs tuned for low-power inference and expose SVE2 or NEON for vectorizable workloads when dedicated accelerators are absent. RDNA 3 adds AI instruction paths and leans on shader programs for techniques like upscaling and frame generation; AMD’s FidelityFX Super Resolution demonstrates that these techniques can run as ordinary shader work, without dedicated tensor hardware.
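Software usually handles this split by probing the CPU at run time and choosing a code path. The following is a minimal sketch that assumes a Linux system, where `/proc/cpuinfo` lists feature tokens such as `amx_tile` and `avx512f` on x86 and `sve2` and `asimd` (the 64-bit Arm name for NEON) on Arm. The function names and the priority order in `pick_backend` are illustrative assumptions, not any framework's actual policy.

```python
def parse_cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect tokens from the 'flags' (x86) or 'Features' (Arm) lines."""
    features: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() in ("flags", "features"):
            features.update(value.split())
    return features


def pick_backend(features: set[str]) -> str:
    """Choose the widest available path; the ordering is an assumption."""
    if "amx_tile" in features:
        return "amx"
    if "avx512f" in features:
        return "avx512"
    if "sve2" in features:
        return "sve2"
    if "asimd" in features or "neon" in features:
        return "neon"
    return "scalar"


if __name__ == "__main__":
    try:
        with open("/proc/cpuinfo", encoding="utf-8") as fh:
            text = fh.read()
    except OSError:
        text = ""  # not Linux, or the file is unreadable
    print(pick_backend(parse_cpu_features(text)))
```

A production dispatcher would also check operating-system support for the extended register state and would typically benchmark paths rather than rank them statically.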

These choices reflect target markets (datacenter throughput, mobile efficiency, or gaming fidelity) while pushing all sides to balance programmability with specialized units. Cross-pollination is visible in shipped products that blend the philosophies. Game consoles pair x86 CPU cores with RDNA 2 GPUs under aggressive thermal limits, proving that power-aware graphics and CPU scheduling can deliver consistent 4K-class experiences. On the other end, smartphone SoCs like Samsung’s Exynos 2200 integrate an RDNA 2–based GPU with ARM CPUs, bringing hardware ray tracing and advanced graphics features to handheld power budgets.

Windows on ARM has gained momentum as recent Qualcomm CPUs target laptop-class performance per watt, while RDNA-powered integrated graphics in x86 APUs raise the baseline for thin-and-light gaming machines. Software compatibility and developer tooling shape adoption as much as raw silicon. Apple’s Rosetta 2 eased the ARM transition for macOS by translating x86-64 apps with minimal friction, showing how binary translation can smooth architectural shifts. On Windows, Microsoft’s x64 emulation and the newer Prism translation layer improve the experience for ARM laptops while native builds gradually expand.

Toolchains and runtimes—compilers, profilers, graphics pipelines, and AI frameworks—now routinely target x86, ARM, and modern GPUs with near-parity feature sets, enabling developers to optimize for power envelopes without abandoning portability. The result is not a single winner but a reshaped landscape where specialization coexists with general-purpose flexibility. x86 evolves through hybrid designs and matrix extensions to preserve compatibility while reducing joules per task. ARM advances as a system-first platform, integrating accelerators tightly and scaling from phones to servers with predictable efficiency.

RDNA continues to raise the bar for graphics performance per watt and adopts selective AI and chiplet innovations, reinforcing the GPU’s role as a power-conscious parallel engine. Together, these trajectories make performance per watt—not peak FLOPS—the metric that decides how future devices are built and how software is written.
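The two efficiency measures used in this article, performance per watt and joules per task, are reciprocals of each other and are easy to compute from a sustained throughput and an average power reading. The sketch below uses two hypothetical chips with invented numbers purely to show the arithmetic; `Chip` and its fields are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    tasks_per_second: float  # sustained throughput on some fixed workload
    avg_power_watts: float   # average power draw while running it

    def perf_per_watt(self) -> float:
        """Tasks completed per second for each watt drawn."""
        return self.tasks_per_second / self.avg_power_watts

    def joules_per_task(self) -> float:
        """Energy spent per task; watts are joules per second."""
        return self.avg_power_watts / self.tasks_per_second


# Hypothetical chips: A is faster in absolute terms, B is more efficient.
chips = [Chip("A", 1200.0, 60.0), Chip("B", 900.0, 30.0)]
for chip in sorted(chips, key=Chip.perf_per_watt, reverse=True):
    print(f"{chip.name}: {chip.perf_per_watt():.1f} tasks/s/W, "
          f"{chip.joules_per_task():.3f} J/task")
```

With these made-up inputs, chip B ranks first on efficiency despite lower peak throughput, which is the kind of reordering the metric is meant to expose.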