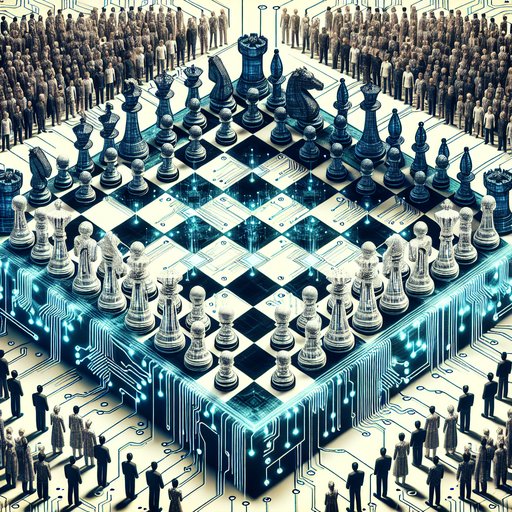

The recent launch of SUPERWISE's AgentOps, a platform aimed at governing AI agent operations, underscores the critical question of who truly controls the algorithms that increasingly dictate our lives. As AI systems become more autonomous and embedded in daily operations, from financial trading to healthcare, the power dynamics surrounding their governance become ever more opaque. This development places a spotlight on the risks inherent in algorithmic decision-making, particularly for those who lack a digital voice to influence or scrutinize these systems.

The introduction of AI governance frameworks like SUPERWISE's AgentOps marks an important step toward accountability in AI systems. AI auditing services are pivotal for leaders who want to understand and mitigate the risks of AI deployment [1]. Yet the broader implications of these frameworks for societal power dynamics merit deeper exploration. Who, if anyone, is equipped to oversee the overseers and ensure that the governance of AI itself remains transparent and equitable?

Historically, technological advancements have often reinforced existing power structures while claiming to democratize them. The Industrial Revolution, for instance, promised emancipation through mechanization but often consolidated power among the few who controlled the new technologies. Similarly, AI can either bridge or widen existing social divides, depending on who wields the power to govern and regulate it. Leadership matters here: directors who lack AI literacy pose significant risks to their organizations, which underscores the need for informed leaders who can steer these transformative technologies responsibly [2].

The concept of "Responsible AI" has been championed by tech giants like Microsoft, which emphasizes principles such as fairness, transparency, and accountability [3]. However, the practical application of these principles often falls short of their aspirations. Even as AI systems grow more complex, reported AI maturity is declining, which suggests that many organizations are struggling to integrate these technologies effectively [4]. This gap between intention and execution creates vulnerabilities that can be exploited, potentially harming those who are least able to advocate for themselves.

At the recent G7 Summit, leaders missed a crucial opportunity to advance global AI governance, which highlights how difficult it is to reach consensus on international oversight [5]. This lack of progress at the global level places even greater importance on national and local governance frameworks. Startups like Trustible, which recently raised $4.6 million to lead in AI governance, offer hope that industry-driven solutions can fill the gaps left by governmental inertia [6]. Yet these efforts must be inclusive and consider the voices of all stakeholders, particularly marginalized communities, who often bear the brunt of algorithmic bias.

Critically, the launch of AgentOps raises questions about the ethical responsibilities of those who develop and deploy AI technologies. With preventative AI poised to transform sectors like health insurance [7], the stakes are high. Without robust governance mechanisms, these systems risk perpetuating inequalities, such as pricing out those with higher health risks through opaque algorithmic assessments. Security and privacy teams must work collaboratively to break down barriers and protect individuals' rights in this rapidly evolving landscape [8].

Moving forward, the path to equitable AI governance requires a multifaceted approach. It necessitates not only technological solutions but also a cultural shift that values diverse perspectives in decision-making processes. Educational initiatives must be intensified to ensure that leaders across sectors can engage meaningfully with AI technologies and their implications. Additionally, public policy must evolve to establish frameworks that hold AI systems accountable, protecting the interests of all citizens, especially those with limited digital literacy.

Ultimately, the launch of platforms like AgentOps could herald a new era of accountability and fairness in AI operations, but only if they are implemented with an unwavering commitment to inclusivity and ethical stewardship. By fostering a culture of transparency and collaboration, society can ensure that AI serves the many rather than entrenching the power of the few. As we stand at this technological crossroads, it is imperative that we prioritize governance models that empower all individuals, paving the way for a future where technology enhances, rather than diminishes, our shared humanity.

Sources

1. How Leaders Can Choose The Right AI Auditing Services (Forbes, 2025-06-25T01:17:27Z)

2. Why AI Illiterate Directors Are The New Liability For Boards Today (Forbes, 2025-06-26T21:02:01Z)

3. Microsoft says “Responsible AI” is now its biggest priority - but what does this look like? (TechRadar, 2025-06-23T14:28:00Z)

4. Why AI Maturity Is Declining And Why That’s Exactly What CEOs Need To Reinvent Their Businesses (Forbes, 2025-06-23T11:15:00Z)

5. The G7 Summit Missed an Opportunity for Progress on Global AI Governance (Rand.org, 2025-06-23T20:50:00Z)

6. Biz Talk: Arlington Startup Trustible Raises $4.6 Million to Lead in AI Governance (ARLnow, 2025-06-27T14:45:35Z)

7. Preventative AI Is Poised To Transform Health Insurance (Forbes, 2025-06-24T10:00:00Z)

8. How Security and Privacy Teams Break Barriers Together (Zeltser.com, 2025-06-27T19:13:12Z)